-

Select your language

English

繁體中文

日本語

Italiano

Русский

Deutsch

简体中文

한국어

Español

- Digital Annual Event

- Join Now >

- SOLUTIONS FOR

-

Data Store & Protection

-

Infrastructure

-

Industries

- BY DATA SYSTEM

-

Block Storage

-

File Storage

- QSAN HOUR - Modern Cloud Collaboration Strategies

- Watch the video >

- OUR PARTNERS

-

Technology Partners

- BY FLASH TYPE

-

All-Flash Storage

-

Hybrid Flash Storage

- SOFTWARE

-

Management System

-

Storage Utilities

-

INDUSTRIES

- HPC and AI Applications

- MSP

- Higher Education

- Media Inline

-

INFRASTRUCTUE

- Cloud Collaboration

- DevOps

- VDI

- Work from Home

- High Speed

- IT Infrastructure

- Virtualization Storage

-

DATA STORE & PROTECTION

- Large Scale Surveillance

- Business Backup

- All-Flash Array

- Data Security

- Data Protection

-

ALL NEW XCUBESAN

-

XCubeSAN 5300 Series

-

XCubeSAN 3300 Series

-

" >

-

FLASH SYSTEM

-

XEVO

-

BLOCK SYSTEM

-

XCubeFAS Series

-

UNIFIED STORAGE

-

XCubeNXT 8100 Series

-

XCubeNXT 5100 Series

-

ALL FLASH ARRAY

-

XCubeFAS Series

-

ALL NEW NAS

-

XCubeNAS 8100R Series

-

XCubeNAS 5100R Series

-

FILE SYSTEM

-

XCubeNXT 8100 Series

-

XCubeNXT 5100 Series

-

HYBRID FLASH STORAGE

-

XCubeSAN 5300 Series

-

XCubeSAN 3300 Series

-

FILE SYSTEM

-

QSM

-

FILE SYSTEM

-

XCubeNAS 8100R Series

-

XCubeNAS 5100R Series

-

NETWORK ATTACHED STORAGE

-

XCubeNAS 8000 Series

-

XCubeNAS 7000 Series

-

XCubeNAS 5000 Series

-

XCubeNAS 3000 Series

-

XCUBENXT 5100 SERIES

- XN5126

-

XCUBENXT 8100 SERIES

- XN8126

-

Explore the Modern World Driven by DataJoin us to explore more about critical storage technologies powered by our reliable partners, Veeam and Western Digital. With their insight, the annual event will bring the industry a comprehensive view of the next generation of innovative data applications.

Explore the Modern World Driven by DataJoin us to explore more about critical storage technologies powered by our reliable partners, Veeam and Western Digital. With their insight, the annual event will bring the industry a comprehensive view of the next generation of innovative data applications. -

QSM

- Overview

- Hybrid SSD Cache

- Deduplication

- Always-On Technology

- Store Data Completely

- All Business in QSM

-

SANOS

- Overview

- QReplica 3.0

-

XCUBEFAS SERIES

- XF3126

-

XEVO

- Overview

- Native SSD Monitoring

- System Usage Analysis

- Simplified Deployment

- Higher Bandwidth

- Storage Efficiency

- Great Flexibility

- Cloud Native

- QReplica 3.0

-

CUSTOMER SUCCESS

- New CB Party Culture & Arts

-

CUSTOMER SUCCESS

- Iowa-Based University

-

CUSTOMER SUCCESS

- Taiwan Fund for Children and Families

-

CUSTOMER SUCCESS

- TaiDoc Technology Corporation

-

CUSTOMER SUCCESS

- IT Forensic Services Provider

-

CUSTOMER SUCCESS

- DSC Unternehmensberatung und Software GmbH

-

CUSTOMER SUCCESS

- Leading Internet Service Provider(ISP) in the USA

-

CUSTOMER SUCCESS

- Agricultural and Forestry Aviation Survey Office

-

CUSTOMER SUCCESS

- Geomatic Department in Turkey

-

CUSTOMER SUCCESS

- Kazan National Research Technological University

-

CUSTOMER SUCCESS

- TSys Uses QSAN NVMe All-Flash for 3000 VMs

-

CUSTOMER SUCCESS

- National Institute of Geophysic and Volcanology

-

CUSTOMER SUCCESS

- XCubeSAN for Over 100 VMs Hosting

-

CUSTOMER SUCCESS

- Non-profit Cloud Services Provider

-

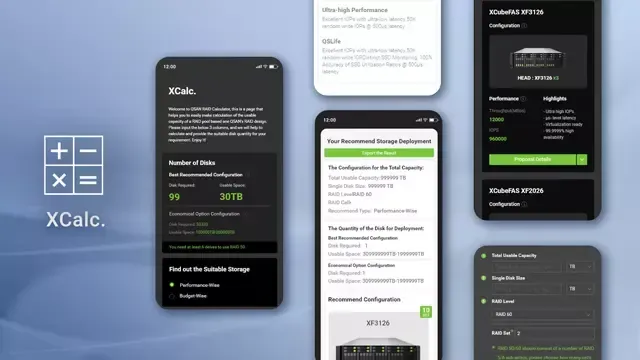

TRY IT NOW

- QSM Demo

-

TRY IT NOW

- SANOS Demo

-

TRY IT NOW

- XEVO Demo

-

XCUBENAS 8000 TOWER

- XN8008T

- Products

- Solution

- Support

- Company

-

- Select your language

- Digital Annual Event

- Join Now >

- SOLUTIONS FOR

-

Data Store & Protection

-

Infrastructure

-

Industries

- BY DATA SYSTEM

-

Block Storage

-

File Storage

- QSAN HOUR - Modern Cloud Collaboration Strategies

- Watch the video >

- OUR PARTNERS

-

Technology Partners

- BY FLASH TYPE

-

All-Flash Storage

-

Hybrid Flash Storage

- SOFTWARE

-

Management System

-

Storage Utilities

-

INDUSTRIES

- HPC and AI Applications

- MSP

- Higher Education

- Media Inline

-

INFRASTRUCTUE

- Cloud Collaboration

- DevOps

- VDI

- Work from Home

- High Speed

- IT Infrastructure

- Virtualization Storage

-

DATA STORE & PROTECTION

- Large Scale Surveillance

- Business Backup

- All-Flash Array

- Data Security

- Data Protection

-

ALL NEW XCUBESAN

-

XCubeSAN 5300 Series

-

XCubeSAN 3300 Series

-

" target="_self">

-

FLASH SYSTEM

-

XEVO

-

BLOCK SYSTEM

-

XCubeFAS Series

-

UNIFIED STORAGE

-

XCubeNXT 8100 Series

-

XCubeNXT 5100 Series

-

ALL FLASH ARRAY

-

XCubeFAS Series

-

ALL NEW NAS

-

XCubeNAS 8100R Series

-

XCubeNAS 5100R Series

-

FILE SYSTEM

-

XCubeNXT 8100 Series

-

XCubeNXT 5100 Series

-

HYBRID FLASH STORAGE

-

XCubeSAN 5300 Series

-

XCubeSAN 3300 Series

-

FILE SYSTEM

-

QSM

-

FILE SYSTEM

-

XCubeNAS 8100R Series

-

XCubeNAS 5100R Series

-

NETWORK ATTACHED STORAGE

-

XCubeNAS 8000 Series

-

XCubeNAS 7000 Series

-

XCubeNAS 5000 Series

-

XCubeNAS 3000 Series

-

XCUBENXT 5100 SERIES

- XN5126

-

XCUBENXT 8100 SERIES

- XN8126

-

Best Storage for AIThe convergence of HPC and AI is changing the world. The new era requires new storage solutions to handle growing data in an efficient manner.

Best Storage for AIThe convergence of HPC and AI is changing the world. The new era requires new storage solutions to handle growing data in an efficient manner.-

Accelerate Media WorkflowsWith the increase of high-quality formats and the continuous improvement of hardware resolution, the existing technology and infrastructure equipment are extremely.

Accelerate Media WorkflowsWith the increase of high-quality formats and the continuous improvement of hardware resolution, the existing technology and infrastructure equipment are extremely.-

Digital Education TransformationSmart learning, easy management. Enlighten students with a powerful learning system and innovative data research. The solutions in reducing the complexity in education and digital data management create a win-win environment for higher education

Digital Education TransformationSmart learning, easy management. Enlighten students with a powerful learning system and innovative data research. The solutions in reducing the complexity in education and digital data management create a win-win environment for higher education-

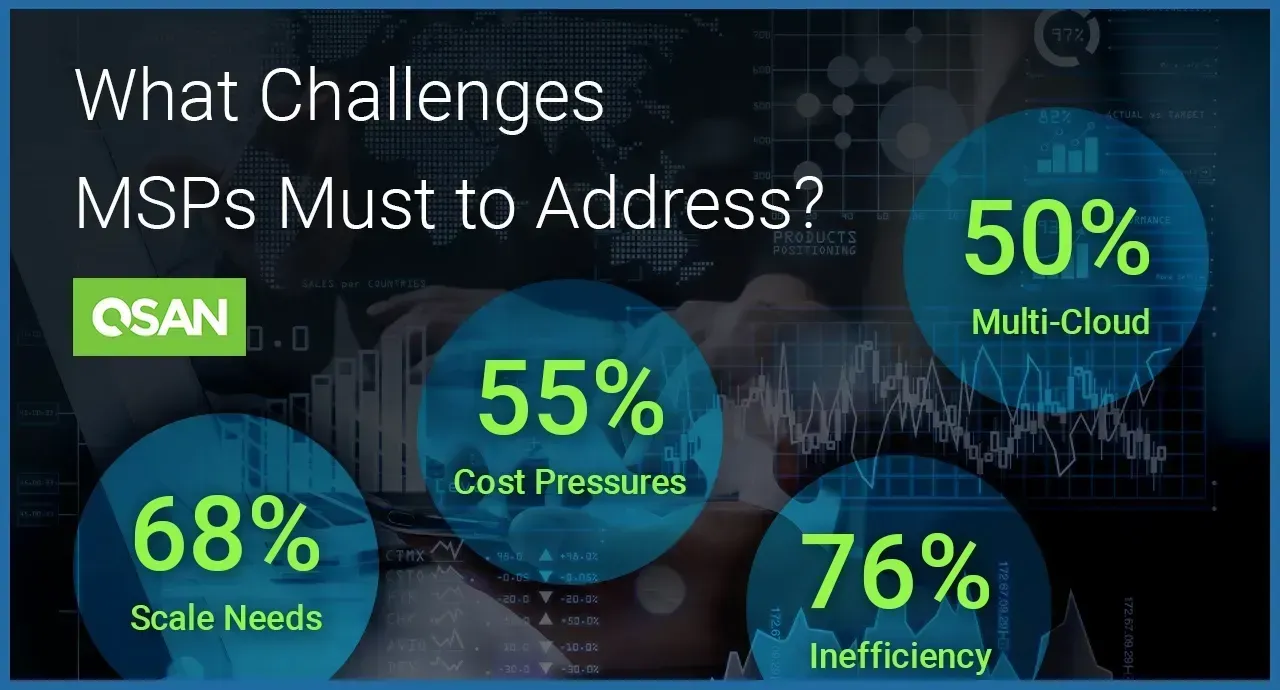

Managed Service ProviderSolutions for managed service providers in helping alleviate risk, reducing complexity, lowering TCO, and building a safe SLA guarantee infrastructure.

Managed Service ProviderSolutions for managed service providers in helping alleviate risk, reducing complexity, lowering TCO, and building a safe SLA guarantee infrastructure.-

Virtualization IntegrationVirtualization can meet the IT needs of sustainable growth companies and is an indispensable and important solution for enterprise growth and transformation.

Virtualization IntegrationVirtualization can meet the IT needs of sustainable growth companies and is an indispensable and important solution for enterprise growth and transformation.-

Effectively Integrate IT ServicesDigital information is an important asset for businesses. How do you structure the right IT environment to meet massive data growth, dig out valuable information and improve business profitability?

Effectively Integrate IT ServicesDigital information is an important asset for businesses. How do you structure the right IT environment to meet massive data growth, dig out valuable information and improve business profitability?-

High Speed ConnectivityHigher bandwidth and better performance.

High Speed ConnectivityHigher bandwidth and better performance.-

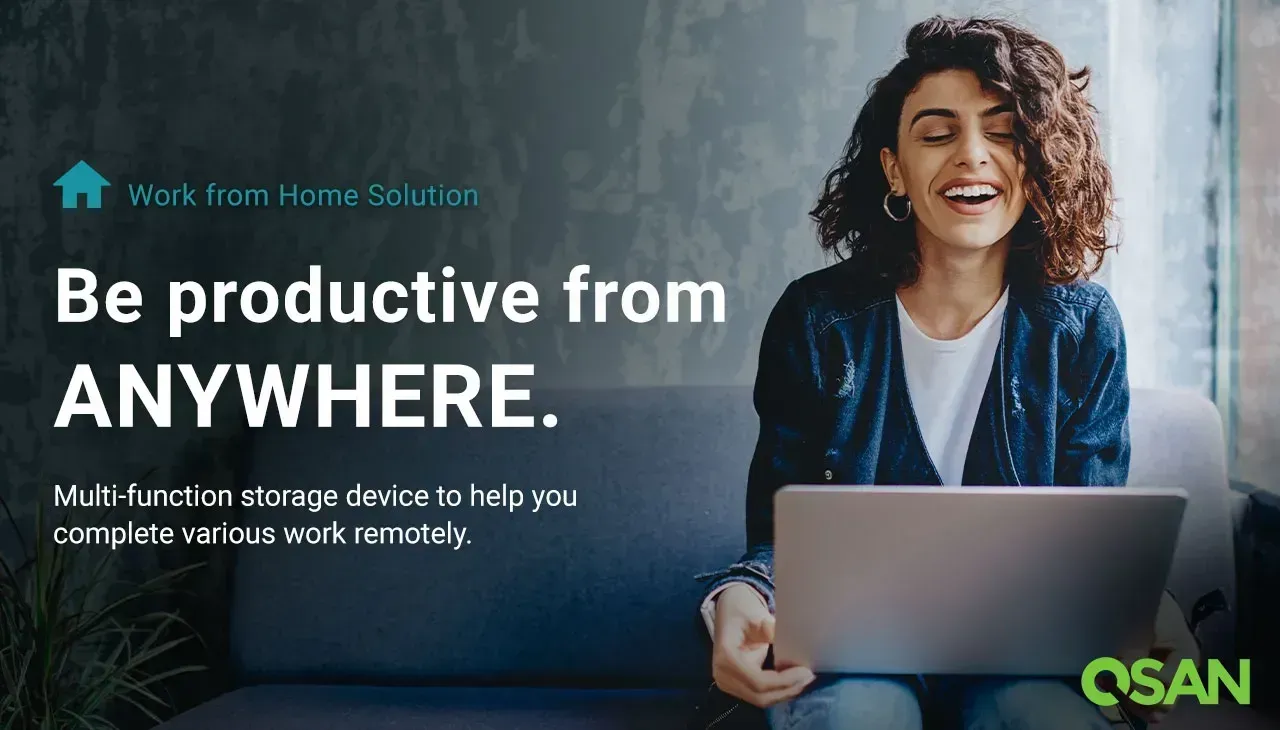

Ensure Remote Work ProductivityMulti-function storage device to help you complete various work remotely.

Ensure Remote Work ProductivityMulti-function storage device to help you complete various work remotely.-

Efficiency Virtual Desktop InfrastructureExperience the Smooth Virtual Desktop Infrastructure(VDI) in the Cloud. Deploy VMWare Hyper-V, and Citrix VDI with Efficient and Affordable QSAN Storage.

Efficiency Virtual Desktop InfrastructureExperience the Smooth Virtual Desktop Infrastructure(VDI) in the Cloud. Deploy VMWare Hyper-V, and Citrix VDI with Efficient and Affordable QSAN Storage.-

DevOps AccelerationThe productivity of developers determines the speed of product innovation. The efficient storage for DevOps accelerates the entire development and production process to empower the competitiveness of the company.

DevOps AccelerationThe productivity of developers determines the speed of product innovation. The efficient storage for DevOps accelerates the entire development and production process to empower the competitiveness of the company.-

High Availability Data ProtectionData is the core competitiveness of enterprises, Whether it is a hardware failure or an accident, the disaster of data corruption will directly reduce the competitiveness of enterprises.

High Availability Data ProtectionData is the core competitiveness of enterprises, Whether it is a hardware failure or an accident, the disaster of data corruption will directly reduce the competitiveness of enterprises.-

Data Security is EverythingSecure the vital business data from unauthorized disclosure, modification or destruction.

Data Security is EverythingSecure the vital business data from unauthorized disclosure, modification or destruction.-

AFA Procurement StrategyMoving to the flash arena, you might want to start with a SMB/entry-level all-flash array. But in the current market, whether the one is just a faster Hybrid Array?

AFA Procurement StrategyMoving to the flash arena, you might want to start with a SMB/entry-level all-flash array. But in the current market, whether the one is just a faster Hybrid Array?-

Secure All of Your Business DataData is the most important asset for the business. Disaster happens in a random manner, business will be in danger without the protection of their data and cause significant losses for further success.

Secure All of Your Business DataData is the most important asset for the business. Disaster happens in a random manner, business will be in danger without the protection of their data and cause significant losses for further success.-

Video Surveillance StorageIn order to be able to collect richer data, high-definition cameras bring higher bandwidth requirements, increase in writing speed and storage capacity.

Video Surveillance StorageIn order to be able to collect richer data, high-definition cameras bring higher bandwidth requirements, increase in writing speed and storage capacity.-

Hybrid Cloud ManagementCloud collaboration bridges between computing platforms. Through the diversified implementation of public and private clouds.

Hybrid Cloud ManagementCloud collaboration bridges between computing platforms. Through the diversified implementation of public and private clouds.-

Unify Data ManagementUnify data and manage from anywhere, through simplified platforms and detailed system information, achieve more smarter data management.

Unify Data ManagementUnify data and manage from anywhere, through simplified platforms and detailed system information, achieve more smarter data management.-

Explore the Modern World Driven by DataJoin us to explore more about critical storage technologies powered by our reliable partners, Veeam and Western Digital. With their insight, the annual event will bring the industry a comprehensive view of the next generation of innovative data applications.

Explore the Modern World Driven by DataJoin us to explore more about critical storage technologies powered by our reliable partners, Veeam and Western Digital. With their insight, the annual event will bring the industry a comprehensive view of the next generation of innovative data applications.-

QSM

- Overview

- Hybrid SSD Cache

- Deduplication

- Always-On Technology

- Store Data Completely

- All Business in QSM

-

SANOS

- Overview

- QReplica 3.0

-

XCUBEFAS SERIES

- XF3126

-

XEVO

- Overview

- Native SSD Monitoring

- System Usage Analysis

- Simplified Deployment

- Higher Bandwidth

- Storage Efficiency

- Great Flexibility

- Cloud Native

- QReplica 3.0

-

CUSTOMER SUCCESS

- New CB Party Culture & Arts

-

CUSTOMER SUCCESS

- Iowa-Based University

-

CUSTOMER SUCCESS

- Taiwan Fund for Children and Families

-

CUSTOMER SUCCESS

- TaiDoc Technology Corporation

-

CUSTOMER SUCCESS

- IT Forensic Services Provider

-

CUSTOMER SUCCESS

- DSC Unternehmensberatung und Software GmbH

-

CUSTOMER SUCCESS

- Leading Internet Service Provider(ISP) in the USA

-

CUSTOMER SUCCESS

- Agricultural and Forestry Aviation Survey Office

-

CUSTOMER SUCCESS

- Geomatic Department in Turkey

-

CUSTOMER SUCCESS

- Kazan National Research Technological University

-

CUSTOMER SUCCESS

- TSys Uses QSAN NVMe All-Flash for 3000 VMs

-

CUSTOMER SUCCESS

- National Institute of Geophysic and Volcanology

-

CUSTOMER SUCCESS

- XCubeSAN for Over 100 VMs Hosting

-

CUSTOMER SUCCESS

- Non-profit Cloud Services Provider

-

TRY IT NOW

- QSM Demo >

-

TRY IT NOW

- SANOS Demo >

-

TRY IT NOW

- XEVO Demo >

-

XCUBENAS 8000 TOWER

- XN8008T

-

Select your language

Find Local Partners to SupportEnglish

繁體中文

日本語

Italiano

Русский

Deutsch

简体中文

한국어

Español

Your message has been sent -

-

-

Frequent Asked Question -

-

-

-

-

-

-

-

-

-

-

-

-

-

-